How Edge-to-Cloud Data Fabrics and Real-Time ETL Are Transforming Healthcare IT Leadership

Explore why edge-to-cloud data fabrics and real-time ETL are redefining healthcare IT. Learn technical strategies, leadership frameworks, and real-world lessons for overcoming data gravity, accelerating analytics, and building a future-ready healthcare ecosystem.

Why data gravity is holding healthcare back

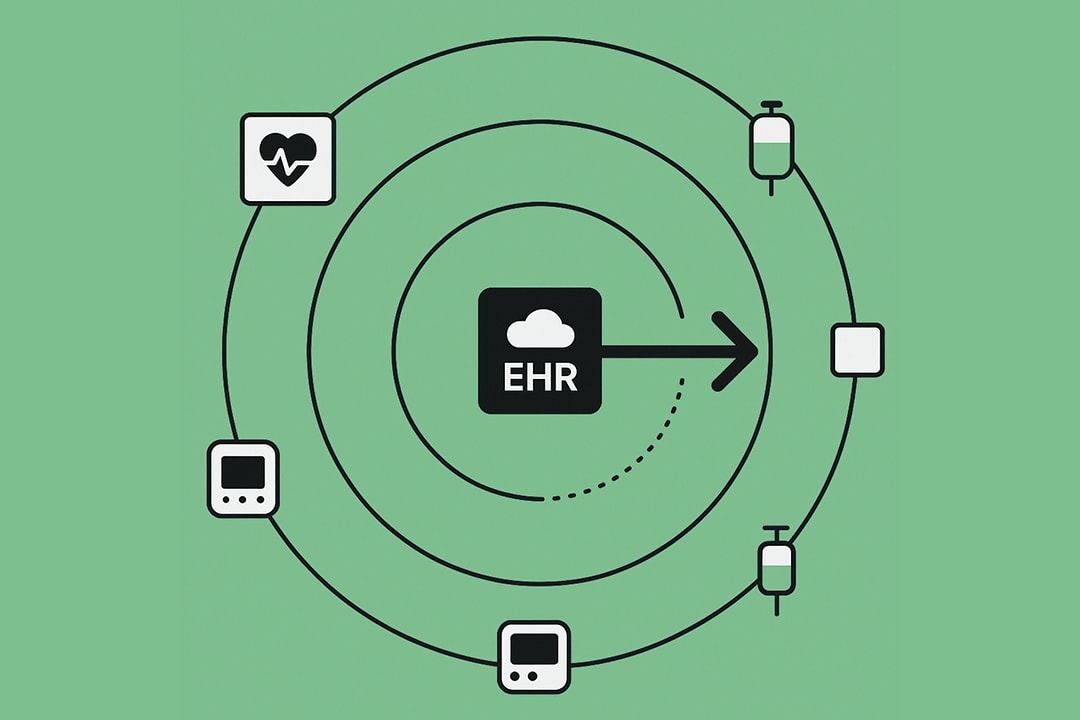

It’s no secret that healthcare data is multiplying at an almost absurd rate. From bedside monitors and infusion pumps to radiology PACS, EHRs, and a constellation of IoT devices, the modern hospital is awash in data.

But for every terabyte captured, there’s a silent challenge: getting that data to where it needs to be, when it matters. In practice, most of that information stays stubbornly close to where it was generated, a phenomenon experienced technologists now call “data gravity.”

This is not just a buzzword. It describes a real constraint: data, like a planet, attracts other data and applications, which makes large datasets hard to move or integrate across locations and clouds.
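To put rough numbers on that pull, the time to copy a dataset is its size divided by the bandwidth you can actually use. The sketch below is a back-of-the-envelope estimate; the 500 TB archive, the 10 Gbps link, and the 70% efficiency factor are illustrative assumptions, not figures from any cited study.

```python
def transfer_days(dataset_tb: float, link_gbps: float, efficiency: float = 0.7) -> float:
    """Estimate how many days it takes to copy a dataset over a network link.

    dataset_tb: size in decimal terabytes (1 TB = 10**12 bytes)
    link_gbps:  nominal link speed in gigabits per second
    efficiency: assumed fraction of nominal bandwidth actually achieved
    """
    bits = dataset_tb * 10**12 * 8
    effective_bits_per_s = link_gbps * 10**9 * efficiency
    return bits / effective_bits_per_s / 86_400  # seconds -> days

# Hypothetical example: a 500 TB imaging archive over a 10 Gbps link
print(f"{transfer_days(500, 10):.1f} days")  # 6.6 days
```

Nearly a week of saturated transfer for a single copy, before any validation or reprocessing, is why large archives tend to pull applications toward them rather than the other way around.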

Why does this matter? Because the promise of AI-driven clinical insights, population health analytics, and even basic operational visibility depends on breaking free from these gravitational wells.

According to McKinsey, a staggering 80% of healthcare and industrial organizations now cite data gravity as the top barrier to unlocking AI and advanced analytics at scale. This isn’t about hypothetical future projects. It’s about why so many promising pilots stall before they ever reach the bedside or the boardroom.

How edge-to-cloud data fabrics are shifting the landscape

So, why is everyone suddenly talking about edge-to-cloud data fabrics? The answer is rooted in pragmatic necessity, not marketing hype.

A data fabric isn’t just a new integration tool. It’s an architecture that weaves together edge, on-prem, and cloud sources into a single, policy-driven, intelligent layer.

That means automating the movement, transformation, and governance of data in real time, whether it’s streaming from an ICU monitor or ingested from a cloud-based research registry. Leading vendors like Microsoft, IBM, and AWS are embedding these capabilities into their healthcare cloud offerings, but the core principles remain platform-agnostic: unify, automate, and secure.
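One way to picture “policy-driven” is a single rule set that every data movement is checked against, wherever the source or destination lives. The minimal sketch below assumes hypothetical source and destination names and a deliberately simple two-rule policy (destinations must be encrypted; PHI may only flow to destinations approved for it). Commercial fabrics express such policies in their own configuration languages, not in this form.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DataSource:
    name: str
    tier: str            # "edge", "on_prem", or "cloud" (informational in this sketch)
    contains_phi: bool

@dataclass(frozen=True)
class Destination:
    name: str
    tier: str
    encrypted: bool
    phi_approved: bool   # hypothetical flag, e.g. covered by the right agreements

def movement_allowed(src: DataSource, dst: Destination) -> bool:
    """Apply one central policy to every flow, regardless of tier."""
    if not dst.encrypted:
        return False
    if src.contains_phi and not dst.phi_approved:
        return False
    return True

catalog = [
    DataSource("icu_monitor_stream", "edge", contains_phi=True),
    DataSource("research_registry", "cloud", contains_phi=False),
]
analytics = Destination("cloud_analytics", "cloud", encrypted=True, phi_approved=False)

for src in catalog:
    print(src.name, "->", analytics.name, movement_allowed(src, analytics))
```

The point of the design is that the rule lives in one place: adding a new edge device or cloud destination means registering it in the catalog, not rewriting per-integration security logic.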

Real-time ETL (Extract, Transform, Load) is the engine that keeps this fabric humming.

Unlike old-school batch ETL—think of waiting overnight for the lab results to sync—real-time ETL enables sub-second data streaming, automated normalization, and immediate availability for analytics, alerting, or AI model training.
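The difference is structural: a batch job collects records and processes them together later, while a streaming pipeline extracts, transforms, and loads each event as it arrives. The sketch below chains Python generators so that each event passes through all three stages before the next one is read. The JSON event format and the Fahrenheit-to-Celsius rule are assumptions for illustration, and the in-memory lists stand in for a real message stream and sink.

```python
import json
from typing import Iterable, Iterator

def extract(raw_events: Iterable[str]) -> Iterator[dict]:
    """Parse each raw message as soon as it is read."""
    for line in raw_events:
        yield json.loads(line)

def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Normalize units on the fly (here: body temperature to Celsius)."""
    for e in events:
        if e.get("unit") == "degF":
            e = {**e, "value": round((e["value"] - 32) * 5 / 9, 1), "unit": "degC"}
        yield e

def load(events: Iterable[dict], sink: list) -> None:
    """Deliver each event immediately; a real sink might be a database or alerting service."""
    for e in events:
        sink.append(e)

raw = [
    '{"patient": "p1", "vital": "temp", "value": 101.3, "unit": "degF"}',
    '{"patient": "p1", "vital": "hr", "value": 112, "unit": "bpm"}',
]
sink: list = []
load(transform(extract(raw)), sink)
print(sink)
```

Because generators are lazy, replacing `raw` with an unbounded iterator (for example, a consumer reading from a message queue) keeps the same per-event behavior.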

A recent MIT Sloan study shows that edge-to-cloud architectures with automated, real-time ETL can increase analytics speed by 5-10x and cut manual data engineering work by up to 60%. For health IT leaders who have spent years wrangling CSVs and reconciling “single sources of truth,” these are not trivial numbers.

Why overcoming data gravity is a strategic imperative, not just a technical fix

The stakes could not be higher. The regulatory environment is tightening, with mandates like the 21st Century Cures Act and CMS interoperability rules demanding seamless data exchange and patient access.

At the same time, the clinical side is pushing for real-time decision support, predictive risk scoring, and automation that can’t wait for yesterday’s data to show up. Every minute spent waiting for a batch process to complete is a minute a patient could be at risk, a workflow could stall, or a compliance audit could expose costly gaps.

Add in the cybersecurity realities, where every additional copy of data or ad hoc integration becomes a new attack surface, and the case for edge-to-cloud data fabrics becomes clear. They do more than move data efficiently. They enforce encryption and policy-driven governance across every node, which helps organizations build HIPAA and GDPR compliance into the design rather than bolting it on later.

How leaders are navigating the shift

Healthcare IT leadership is no longer about just keeping the lights on. The job now is to architect for flexibility, resilience, and velocity while managing risk and regulatory pressure.

Overcoming data gravity isn’t a one-time project. It’s a new operating model. The organizations making real progress are those that treat their data fabric not as a tactical integration layer, but as a strategic infrastructure that supports everything from bedside analytics to cloud-scale AI.

Getting this right is what will separate tomorrow’s industry leaders from those still stuck in the gravity well. The imperative is clear: build for data movement, not just data storage, and the rest will follow.

How edge-to-cloud data fabrics and real-time ETL are rewiring healthcare IT

Why unified architecture matters more than ever

Healthcare IT has always struggled with fragmentation. From departmental silos to a patchwork of legacy and cloud systems, the story is the same in nearly every health system: data is everywhere, but unified access is elusive.

The rise of edge-to-cloud data fabrics is rewriting this script, not by layering on more complexity, but by introducing a single, policy-driven plane that connects, manages, and governs data across edge, on-premises, and cloud environments.

A modern data fabric uses intelligent metadata, policy automation, and orchestration to discover, catalog, and unify diverse data sources.

In healthcare, this means HL7 feeds from bedside monitors, DICOM images from radiology, FHIR APIs from EHR vendors, and IoT telemetry from wearables can all be surfaced, secured, and analyzed as a single logical resource.
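Surfacing these feeds as one logical resource usually starts with mapping each format onto a shared record shape. The sketch below normalizes an HL7 v2 OBX segment and a FHIR Observation into the same dictionary. It assumes the default HL7 v2 delimiters (`|` and `^`), a numeric result, and a FHIR Observation carrying `valueQuantity`; the target record layout is a hypothetical choice, not a standard.

```python
def from_hl7v2_obx(segment: str) -> dict:
    """Map an HL7 v2 OBX segment (default delimiters, numeric value) to a common record."""
    fields = segment.split("|")
    return {
        "code": fields[3].split("^")[0],   # OBX-3: observation identifier (e.g. a LOINC code)
        "value": float(fields[5]),         # OBX-5: observation value
        "unit": fields[6].split("^")[0],   # OBX-6: units
        "source": "hl7v2",
    }

def from_fhir_observation(resource: dict) -> dict:
    """Map a FHIR Observation with a valueQuantity to the same common record."""
    coding = resource["code"]["coding"][0]
    quantity = resource["valueQuantity"]
    return {
        "code": coding["code"],
        "value": float(quantity["value"]),
        "unit": quantity["unit"],
        "source": "fhir",
    }

obx = "OBX|1|NM|8867-4^Heart rate^LN||112|/min|||||F"
fhir = {
    "resourceType": "Observation",
    "status": "final",
    "code": {"coding": [{"system": "http://loinc.org", "code": "8867-4", "display": "Heart rate"}]},
    "subject": {"reference": "Patient/p1"},
    "valueQuantity": {"value": 112, "unit": "/min"},
}
print(from_hl7v2_obx(obx))
print(from_fhir_observation(fhir))
```

Once both feeds share a shape, downstream analytics no longer need to know which interface a reading came from. Production parsers also have to handle escape sequences, repetitions, and non-numeric value types that this sketch ignores.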

Major players like Microsoft, IBM, and AWS have built robust healthcare data fabrics that support this model, but the principle is the same no matter the platform: untangle the mess, automate the flow, and enforce governance everywhere data lives or moves.

How real-time ETL accelerates clinical value

Traditional ETL in healthcare is like relying on overnight mail in a world that demands instant messaging.

Batch jobs, manual data mapping, and delayed reporting are liabilities when patient safety, operational efficiency, and regulatory deadlines are on the line. Real-time ETL flips the paradigm. It extracts, transforms, and loads data as events happen, not hours or days later.

With edge-to-cloud architecture, event-driven pipelines ingest data from the point of care—think ICU monitors, smart pumps, and mobile diagnostics—then apply rules-based transformations, normalization, and enrichment on the fly.

This data is then routed to wherever it is needed: an on-prem clinical decision support system, a public cloud AI engine, or a research analytics sandbox.
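The enrich-then-route step can be sketched as a table of predicates, one per destination, evaluated for every event. In the Python below, the destination names mirror the three examples above, but the heart-rate threshold, the consent flag, and the unit lookup are hypothetical placeholders rather than clinical or vendor defaults.

```python
from typing import Callable

# Hypothetical routing table: destination name -> predicate over an enriched event
ROUTES: list[tuple[str, Callable[[dict], bool]]] = [
    ("clinical_decision_support", lambda e: e["vital"] == "hr" and e["value"] > 110),
    ("cloud_ai_engine", lambda e: True),  # every event feeds model training
    ("research_sandbox", lambda e: e.get("research_consent", False)),
]

UNITS = {"hr": "bpm", "spo2": "%"}  # placeholder enrichment lookup

def enrich(event: dict) -> dict:
    """Attach metadata the source device did not send (here, just the unit)."""
    return {**event, "unit": UNITS.get(event["vital"], "unknown")}

def route(event: dict) -> list[str]:
    """Return every matching destination; one event may fan out to several."""
    return [name for name, matches in ROUTES if matches(event)]

event = enrich({"patient": "p1", "vital": "hr", "value": 118})
print(route(event))  # ['clinical_decision_support', 'cloud_ai_engine']
```

Keeping routing rules as data rather than scattered `if` statements makes it straightforward to add a destination or audit which consumers receive which events.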

The impact is measurable. According to MIT Sloan, healthcare organizations adopting edge-to-cloud architectures with real-time ETL have seen analytics run 5–10 times faster, while reducing manual data wrangling by as much as 60 percent.

That’s not just an efficiency win; it’s a new foundation for near-instant clinical insights, predictive risk stratification, and up-to-the-minute compliance reporting.

Why security and policy automation are non-negotiable

Healthcare data is among the most regulated and sensitive in the world. Moving it across environments creates new opportunities for risk—unless security, privacy, and compliance are woven into the architecture.

Data fabrics address this by embedding policy enforcement at every node, whether data is in motion or at rest. Encryption, automated access controls, and real-time audit trails are table stakes.
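Audit trails are most useful when they are tamper-evident. A common technique is to hash-chain log entries so that each entry commits to the hash of the one before it; editing or reordering past entries then breaks verification from that point on. The sketch below shows the idea with SHA-256 from Python's standard library. The entry fields are assumptions, timestamps are omitted to keep it deterministic, and the chain detects tampering rather than preventing it; encryption and access control remain separate layers.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def append_entry(log: list[dict], actor: str, action: str, resource: str) -> dict:
    """Append an entry that commits to the hash of the entry before it."""
    body = {"actor": actor, "action": action, "resource": resource,
            "prev": log[-1]["hash"] if log else GENESIS}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry

def verify(log: list[dict]) -> bool:
    """Recompute the chain; an edited or reordered entry makes this return False."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True

audit: list[dict] = []
append_entry(audit, "dr_lee", "read", "Patient/p1")
append_entry(audit, "etl_service", "transform", "Observation/o9")
print(verify(audit))               # True
audit[0]["resource"] = "Patient/p2"
print(verify(audit))               # False
```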

The best implementations go further, supporting zero-trust frameworks and federated governance across partner ecosystems.

That means not just meeting HIPAA and GDPR requirements, but also enabling granular, real-time control over who can access what, where, and when, with every transaction logged and auditable.
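Granular “who, what, where, and when” control is often implemented as an attribute-based, deny-by-default check that logs every decision. The sketch below is a minimal Python illustration; the roles, resource types, locations, and hours in `POLICY` are hypothetical, and production systems often rely on a dedicated policy engine instead of hand-written checks.

```python
from dataclasses import dataclass
from datetime import datetime, time

@dataclass(frozen=True)
class AccessRequest:
    role: str           # who
    resource_type: str  # what
    location: str       # where (network zone or care unit)
    at: datetime        # when

# Hypothetical policy: (role, resource_type) -> (allowed locations, start time, end time)
POLICY = {
    ("icu_nurse", "vitals"): ({"icu"}, time.min, time.max),
    ("researcher", "deidentified_cohort"): ({"research_vpc"}, time(7, 0), time(19, 0)),
}

audit_log: list[tuple[AccessRequest, bool]] = []

def decide(req: AccessRequest) -> bool:
    """Deny by default; allow only if role, resource, location, and time all match."""
    rule = POLICY.get((req.role, req.resource_type))
    allowed = False
    if rule is not None:
        locations, start, end = rule
        allowed = req.location in locations and start <= req.at.time() <= end
    audit_log.append((req, allowed))  # log every decision, including denials
    return allowed

print(decide(AccessRequest("researcher", "deidentified_cohort", "research_vpc", datetime(2024, 5, 1, 10, 0))))  # True
print(decide(AccessRequest("researcher", "deidentified_cohort", "research_vpc", datetime(2024, 5, 1, 21, 0))))  # False
```

Logging denials as well as grants matters for audits: a pattern of refused requests is often the first sign of misconfiguration or misuse.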

How technical leaders are structuring for success

Successful healthcare IT teams are moving away from project-by-project integration and toward platform thinking. They are investing in frameworks that emphasize modularity, scalability, and observability.

The modern approach is to build with open APIs and standards, enabling interoperability and future-proofing against vendor lock-in.

Teams are also prioritizing automation—deploying infrastructure-as-code, continuous integration pipelines, and self-healing data flows.

This not only accelerates deployments but also reduces human error and frees up scarce talent for higher-value work.
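“Self-healing” data flows usually come down to retrying transient failures automatically and parking records that still fail, so nothing is silently lost. The Python sketch below uses exponential backoff and a dead-letter list. Treating `ConnectionError` as the transient failure, the attempt count, and the delays are assumptions, and `sleep` is injectable so the behavior can be tested without waiting.

```python
import time
from typing import Callable

def deliver_with_retry(
    send: Callable[[dict], None],
    record: dict,
    dead_letter: list[dict],
    max_attempts: int = 4,
    base_delay_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Try to deliver a record, backing off exponentially between transient failures."""
    for attempt in range(max_attempts):
        try:
            send(record)
            return True
        except ConnectionError:
            if attempt < max_attempts - 1:
                sleep(base_delay_s * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
    dead_letter.append(record)  # park for inspection and replay instead of dropping it
    return False

def always_down(record: dict) -> None:
    raise ConnectionError("downstream unavailable")

dlq: list[dict] = []
waits: list[float] = []
ok = deliver_with_retry(always_down, {"id": 42}, dlq, sleep=waits.append)
print(ok, dlq, waits)  # False [{'id': 42}] [0.5, 1.0, 2.0]
```

The dead-letter list is the part that turns retries into an operational safety net: someone, or an automated replay job, can inspect and resubmit parked records once the downstream system recovers.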

The organizations making the leap are not just those with the biggest budgets, but those with the discipline to architect for the future, automate where possible, and treat data as a strategic asset rather than a technical afterthought.

The payoff is faster insights, lower costs, and a foundation ready for the next generation of healthcare innovation.

How real-world results reveal the promise and limits of edge-to-cloud data fabrics in healthcare

Why healthcare’s wins are rooted in the details

The best healthcare IT stories are not told in marketing decks—they unfold on the ground, where clinical urgency, regulatory scrutiny, and operational chaos meet. Edge-to-cloud data fabrics are making measurable differences in this environment, but their real value is seen in specific, high-impact scenarios.

Take real-time clinical decision support. Hospitals that have implemented streaming ETL and unified data fabrics are now able to surface early warning scores for sepsis, cardiac risk, or deterioration in near real time.

Instead of waiting for a nightly batch job, care teams receive alerts as soon as data patterns signal a problem, shaving minutes off response times that can mean the difference between recovery and escalation.
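A streaming alert differs from a batch report in that it keeps a little state per patient and re-evaluates on every reading. The Python sketch below fires when the last few heart-rate readings all exceed a threshold. The threshold and window size are placeholders, and this is not a validated early warning score of the kind used for sepsis or deterioration screening.

```python
from collections import deque

class SustainedThresholdAlert:
    """Fire when the most recent `window` readings all exceed `threshold`.

    Placeholder logic for illustration, not a clinically validated score.
    """

    def __init__(self, threshold: float, window: int) -> None:
        self.threshold = threshold
        self.recent: deque = deque(maxlen=window)

    def observe(self, value: float) -> bool:
        self.recent.append(value)  # the oldest reading drops off automatically
        window_full = len(self.recent) == self.recent.maxlen
        return window_full and all(v > self.threshold for v in self.recent)

hr_alert = SustainedThresholdAlert(threshold=110, window=3)
alerts = []
for hr in [98, 112, 115, 109, 118, 121, 125]:
    if hr_alert.observe(hr):
        alerts.append(hr)
print(alerts)  # [125]
```

Requiring a sustained run rather than a single spike is one simple way to trade a little latency for fewer false alarms, which is a real concern for alert fatigue on busy units.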

According to a recent MIT Sloan study, these organizations report analytics runtimes that are 5 to 10 times faster than before, with a 40% to 60% cut in the manual effort required for data prep and reconciliation.

On the research side, cloud-scale AI training with de-identified, harmonized patient data is accelerating discoveries in everything from rare disease genomics to population health.

Health systems using data fabrics as their backbone are able to support regulatory-mandated interoperability, automate consent management, and deliver trustworthy datasets for both in-house and external research, all while maintaining a defensible audit trail.
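Delivering research datasets with a defensible trail usually involves filtering on consent and replacing direct identifiers with stable pseudonyms. The Python sketch below drops a hypothetical set of identifier fields and derives a research ID with a keyed HMAC, so the same patient maps to the same ID without exposing the MRN. It is deliberately simplified: HIPAA de-identification (Safe Harbor or Expert Determination) also covers dates, geographic detail, and other quasi-identifiers that this sketch leaves untouched.

```python
import hashlib
import hmac

# Hypothetical field names treated as direct identifiers in this sketch
DIRECT_IDENTIFIERS = {"name", "mrn", "address", "phone"}

def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Replace direct identifiers with a keyed, repeatable research ID."""
    research_id = hmac.new(secret_key, record["mrn"].encode(), hashlib.sha256).hexdigest()
    kept = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    return {**kept, "research_id": research_id}

def research_extract(records: list[dict], secret_key: bytes) -> list[dict]:
    """Include only patients whose consent flag is set."""
    return [pseudonymize(r, secret_key) for r in records if r.get("research_consent")]

key = b"example-key-keep-real-keys-in-a-secrets-manager"
patients = [
    {"mrn": "123", "name": "A. Patient", "dx": "E11.9", "research_consent": True},
    {"mrn": "456", "name": "B. Patient", "dx": "I10", "research_consent": False},
]
print(research_extract(patients, key))
```

Using a keyed HMAC rather than a plain hash matters here: without the key, an attacker cannot rebuild the mapping simply by hashing every plausible MRN.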

How success depends on more than the technology stack

It’s tempting to believe that deploying a data fabric or edge-to-cloud pipeline is a silver bullet. In reality, outcomes hinge on a mesh of technical, organizational, and process factors that must align.

Start with high-value pilots. The most successful organizations don’t try to “boil the ocean.” They begin with focused use cases—such as ICU monitoring, readmission risk scoring, or imaging analytics—where the ROI is clear and the data flows are well understood. Quick wins build momentum and trust across clinical and technical teams.

Automate governance and observability. Real-time data movement only creates value if it is trustworthy. Automated data quality checks, lineage tracking, and policy enforcement are essential. Leading implementations tie these into their ETL and data fabric layers, creating closed feedback loops for continuous improvement.
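In practice, “trustworthy” starts with checks that run on every record and leave a trace. The Python sketch below validates required fields and plausible ranges, then appends the outcome to the record’s lineage. The field names and the heart-rate range are hypothetical placeholders, not clinical reference limits.

```python
from dataclasses import dataclass, field

@dataclass
class Record:
    data: dict
    lineage: list[str] = field(default_factory=list)  # ordered processing history

def check_quality(rec: Record, required: set[str],
                  ranges: dict[str, tuple[float, float]]) -> list[str]:
    """Flag missing and out-of-range fields, then record the outcome in lineage."""
    issues = [f"missing:{name}" for name in sorted(required) if rec.data.get(name) is None]
    for name, (low, high) in ranges.items():
        value = rec.data.get(name)
        if value is not None and not low <= value <= high:
            issues.append(f"out_of_range:{name}={value}")
    rec.lineage.append("quality_check:" + ("fail" if issues else "pass"))
    return issues

rec = Record({"patient": "p1", "hr": 420}, ["ingested:icu_gateway"])
issues = check_quality(rec, required={"patient", "hr", "ts"}, ranges={"hr": (20, 300)})
print(issues)       # ['missing:ts', 'out_of_range:hr=420']
print(rec.lineage)  # ['ingested:icu_gateway', 'quality_check:fail']
```

Attaching the result to the record itself, instead of only to a log file, is what closes the feedback loop: anyone looking at a downstream value can see which checks it passed on the way.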

Build for adaptability and open integration. Healthcare’s vendors and standards are always evolving. Teams that invest in API-first, standards-based architecture avoid getting stuck with brittle, one-off integrations that can’t scale or adapt.

Foster cross-functional collaboration. IT, clinical operations, analytics, and compliance must all be at the table. The technical lift is significant, but the change management lift is often the harder part. Organizations that treat their data strategy as a living, shared asset—rather than IT’s problem alone—see far better adoption and resilience.

Why challenges still demand pragmatism and patience

For all the progress, edge-to-cloud data fabrics are not a cure-all. Data quality at the edge remains a chronic issue. If the telemetry from a bedside device is noisy, incomplete, or mis-mapped, streaming it to the cloud with real-time ETL just produces bad insights faster.

Schema drift—when source systems change their formats without warning—can break pipelines and sow distrust if not caught early.
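Catching drift early can be as simple as comparing every incoming record against the expected schema before it enters the pipeline. The Python sketch below reports missing fields, unexpected fields, and type changes. The schema is a hypothetical example; real deployments often use a schema registry or a validation library for the same purpose.

```python
# Hypothetical expected schema for a bedside-monitor feed
EXPECTED_SCHEMA: dict[str, type] = {"patient_id": str, "heart_rate": int, "recorded_at": str}

def detect_drift(record: dict, expected: dict[str, type]) -> dict[str, list[str]]:
    """Compare one record against the expected field names and types."""
    missing = sorted(set(expected) - set(record))
    unexpected = sorted(set(record) - set(expected))
    type_changed = sorted(
        k for k in expected.keys() & record.keys() if not isinstance(record[k], expected[k])
    )
    return {"missing": missing, "unexpected": unexpected, "type_changed": type_changed}

# A firmware update starts sending heart_rate as a string and adds a new field
record = {"patient_id": "p1", "heart_rate": "112",
          "recorded_at": "2024-05-01T10:00:00Z", "device_fw": "2.1"}
print(detect_drift(record, EXPECTED_SCHEMA))
# {'missing': [], 'unexpected': ['device_fw'], 'type_changed': ['heart_rate']}
```

Routing drifting records to quarantine, rather than letting them fail somewhere downstream, keeps a single source change from silently corrupting dashboards or alerts.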

Integration with legacy systems is another persistent challenge. Many hospitals still run critical applications on outdated infrastructure that resists modern APIs and data models.

Retrofitting these into a real-time, policy-driven fabric often requires custom adapters, careful validation, and a willingness to iterate.
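A custom adapter typically wraps the legacy format behind the same interface the rest of the fabric already uses, with validation at the boundary. The Python sketch below assumes a hypothetical fixed-width export (patient ID in columns 0-9, date as YYYYMMDD in columns 10-17, heart rate in columns 18-21); the column layout and the `ObservationSource` interface are illustrative, not taken from any specific system.

```python
from typing import Protocol

class ObservationSource(Protocol):
    """Interface every source exposes to the fabric, modern or legacy."""
    def observations(self) -> list[dict]: ...

class LegacyFixedWidthAdapter:
    """Adapts a hypothetical fixed-width export to ObservationSource."""
    RECORD_LENGTH = 22

    def __init__(self, lines: list[str]) -> None:
        self.lines = lines

    def observations(self) -> list[dict]:
        out = []
        for n, line in enumerate(self.lines, start=1):
            # Validate at the boundary so malformed rows never enter the fabric
            if len(line) != self.RECORD_LENGTH:
                raise ValueError(f"line {n}: expected {self.RECORD_LENGTH} chars, got {len(line)}")
            out.append({
                "patient_id": line[0:10].strip(),
                "date": f"{line[10:14]}-{line[14:16]}-{line[16:18]}",
                "heart_rate": int(line[18:22]),
            })
        return out

def count_observations(source: ObservationSource) -> int:
    """Downstream code depends only on the interface, not on the legacy format."""
    return len(source.observations())

line = "P000123".ljust(10) + "20240501" + "112".rjust(4)
adapter = LegacyFixedWidthAdapter([line])
print(adapter.observations())  # [{'patient_id': 'P000123', 'date': '2024-05-01', 'heart_rate': 112}]
print(count_observations(adapter))  # 1
```

When the legacy system is eventually retired, only the adapter changes; everything that consumes `ObservationSource` stays untouched.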

Finally, there’s the human element. Skills shortages in data engineering, governance, and DevOps are real. Siloed teams and institutional inertia can derail even the best-laid technical plans. Successful organizations invest as much in upskilling, change management, and communication as they do in new tools.

How IT leaders can turn hurdles into opportunities

The organizations that thrive are those that see these challenges not as roadblocks but as invitations to build smarter processes, forge stronger partnerships, and continuously evolve their approach.

Edge-to-cloud data fabrics are a journey, not a destination. The real competitive advantage lies in getting better at the journey—measuring, adapting, and never losing sight of the end goal: better care, faster insights, and a healthcare system that works as seamlessly as the data it runs on.

How healthcare IT leaders build edge-to-cloud success: A practical playbook

Why strategic leadership makes the difference

Technical innovation only becomes meaningful in healthcare when it’s guided by leadership that understands both the urgency of today’s workflows and the complexity beneath the surface.

Edge-to-cloud data fabrics and real-time ETL can transform care, but only if they are architected, governed, and evolved with discipline. The role of IT leadership is not just to select the right tools, but to create the conditions where data moves at the speed of care—securely, transparently, and with purpose.

How to architect for velocity and trust

Map the current gravity wells.

Start with a clear-eyed inventory of where data is stuck. Which systems, devices, or departments act as bottlenecks? Where does manual reconciliation steal hours from analytics or clinical teams? Use this map to prioritize integration and automation efforts that will have the greatest real-world impact.

Pilot with intent, not just ambition.

Focus on use cases that are high-stakes but manageable—real-time patient monitoring, predictive readmission risk, or imaging analytics. Scope tightly, instrument everything, and measure both technical and clinical outcomes. Treat every pilot as a learning lab, not a proof of concept destined for the shelf.
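“Instrument everything” can start with one technical metric: end-to-end latency from the moment a reading is captured to the moment it is available downstream. The Python sketch below summarizes that latency as a median and 95th percentile using the standard `statistics` module. The millisecond timestamps are made-up sample data, and clinical outcome measures would be tracked alongside metrics like this, not replaced by them.

```python
import statistics

def latency_summary(captured_ms: list[int], available_ms: list[int]) -> dict[str, float]:
    """Summarize capture-to-availability latency in milliseconds."""
    if len(captured_ms) != len(available_ms) or len(captured_ms) < 2:
        raise ValueError("need matching timestamp lists with at least two events")
    latencies = [a - c for c, a in zip(captured_ms, available_ms)]
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")  # 99 cut points
    return {"p50": statistics.median(latencies), "p95": cuts[94], "max": max(latencies)}

# Made-up sample: 19 events land 200 ms after capture, one straggler takes 3 s
captured = list(range(0, 20_000, 1_000))
available = [c + 200 for c in captured[:-1]] + [captured[-1] + 3_000]
print(latency_summary(captured, available))  # {'p50': 200.0, 'p95': 340.0, 'max': 3000}
```

The tail percentile surfaces the straggler that the median hides, and for alerting use cases the tail is usually what matters.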

Automate governance and observability from day one.

Build policy enforcement, data lineage, and quality monitoring into every pipeline. The playbook here is “trust but verify”—make every data move or transformation auditable, and ensure compliance is proactive, not reactive.

Insist on open standards and modularity.

Avoid the trap of vendor lock-in by prioritizing architectures that are API-driven, standards-based, and future-proof. The healthcare ecosystem will keep evolving—your data fabric should be able to evolve with it, integrating new sources, destinations, and partners without major rewrites.

Invest in talent and cross-functional fluency.

The most effective teams pair technical depth with clinical, operational, and regulatory literacy. Upskill data engineers in healthcare workflows, and ensure clinicians and compliance staff understand what’s newly possible. The best-run organizations make data everyone’s business, not just IT’s.

How to turn setbacks into sustainable progress

No playbook survives first contact with the real world unchanged. Integration headaches, schema drift, and shifting regulatory targets will test even the best-laid plans. The key is to treat setbacks as feedback, not failure. Build agile feedback loops, foster a culture of blameless retrospectives, and keep the lines of communication open between IT, clinical, and executive teams.

The EDGE framework for healthcare IT leaders

The playbook comes down to a simple, actionable framework:

Evaluate: Map data gravity, workflow friction, and compliance needs

Design: Architect modular, standards-based edge-to-cloud data fabrics

Govern: Automate policy, security, and data quality end-to-end

Evolve: Pilot, measure, iterate, and scale with stakeholder buy-in

Why the future favors the prepared

The journey to edge-to-cloud maturity is not a sprint; it is a continual process of learning, adapting, and leading. Organizations that treat their data fabric as strategic infrastructure, not tactical plumbing, will be the ones that move faster, innovate more safely, and deliver real value to clinicians and patients alike.

Leadership is what turns new architectures into better outcomes. In a landscape this complex, that’s the one competitive edge that will always matter.

FAQs

What is an edge-to-cloud data fabric in healthcare?

An edge-to-cloud data fabric is an architectural approach that unifies and manages healthcare data across edge devices, on-premises systems, and cloud platforms.

It provides a single, intelligent layer for real-time data movement, transformation, and policy enforcement, enabling seamless analytics and AI in clinical and operational workflows.

How does real-time ETL improve healthcare analytics?

Real-time ETL (Extract, Transform, Load) automates the ingestion, normalization, and integration of healthcare data as events occur, instead of relying on overnight batch processes.

This dramatically accelerates analytics, enabling near-instant clinical decision support and reducing manual data engineering by up to 60 percent.

Why is overcoming data gravity important for healthcare IT?

Data gravity refers to the difficulty of moving and integrating large volumes of distributed healthcare data. Overcoming it is crucial for supporting AI, real-time analytics, and regulatory compliance.

Data fabrics help break down these barriers by automating data movement, unifying access, and improving interoperability across systems.

What are the main challenges in implementing edge-to-cloud data fabrics in healthcare?

Common challenges include integrating legacy systems, ensuring data quality at the edge, managing schema drift, addressing security and compliance requirements, and bridging skill gaps between IT, clinical, and analytics teams. Successful implementations require strong governance, open standards, and cross-functional collaboration.

How can healthcare organizations get started with edge-to-cloud data fabrics?

Begin by mapping current data silos and bottlenecks, piloting high-value use cases (like real-time patient monitoring), investing in platforms with built-in automation and observability, and prioritizing open standards for future interoperability.

Strong leadership, change management, and continuous measurement are key to long-term success.