How AI is Forcing a Rethink of Cloud, Storage, and Data Fabrics

Discover how AI-optimized storage architectures and edge-to-cloud data fabrics are transforming enterprise IT. Learn why NVMe-over-Fabrics, scale-out object storage, and automated data tiering can deliver up to 10x faster data access, 30-50% lower latency, and lower storage costs for AI workloads.

Why AI and data gravity are breaking old storage models

Legacy storage architectures, once good enough for batch ETL jobs and routine data dumps, are now showing their age.

In an era where AI and machine learning demand real-time access to petabytes of data, the cracks are impossible to ignore.

The culprit isn’t just volume; it’s velocity, variety, and the relentless push for parallel processing. Throw in a few GPU clusters and suddenly that trusty SAN/NAS setup starts gasping for throughput.

According to IDC, organizations deploying traditional arrays for AI workloads are hitting latency ceilings 30–50% higher than those using NVMe-over-Fabrics or scale-out object storage.

The result?

Data scientists waiting for hours to load datasets, inference pipelines stalling, and operations teams forced to cobble together workarounds that feel more like patching a leaky boat than steering a modern ship.

The business impact isn’t theoretical: Gartner’s 2024 research notes that 70% of enterprises cite “data infrastructure bottlenecks” as the main reason AI projects get delayed or quietly abandoned.

Why data gravity means centralized cloud isn’t always the answer

Here’s the twist: moving everything to the cloud doesn’t magically solve these problems.

As the volume and sensitivity of data at the edge—think IoT in manufacturing, medical imaging in healthcare, or real-time transaction logs in retail—skyrockets, the cost and complexity of shuttling terabytes back and forth become unsustainable.

This is the data gravity paradox: the more valuable and distributed your data, the harder it is to move, and the more the infrastructure needs to flex around where the data is actually generated and consumed.

McKinsey reports that 80% of industrial and healthcare leaders now rank “data movement and latency” as the top barrier to scaling AI. MIT Sloan points to a 5–10x improvement in analytics response times when organizations deploy edge-to-cloud data fabrics instead of relying solely on central warehouses.

In the real world, this means critical decisions—be it a predictive maintenance alert or a life-saving diagnostic—happen where the data lives, not in some faraway cloud region.

The stakes for IT leaders

For IT leaders, this isn’t just a technical challenge; it’s an existential one.

The choice is clear: adapt to architectures purpose-built for AI and data gravity, or risk becoming the department of no.

It’s not about chasing shiny objects; it’s about equipping teams with the storage and data fabrics that keep AI projects alive, stakeholders happy, and the business on the front foot. Old models aren’t just creaking; they’re breaking, and the smartest teams are already building what comes next.

Why NVMe-over-Fabrics is the backbone for modern data stacks

Enterprise IT teams aren’t moving to AI-ready storage because it’s trendy; they’re doing it because legacy SANs and NAS boxes simply can’t keep up.

AI workloads, especially those powered by GPUs, are famously data-hungry and unforgiving of latency. NVMe-over-Fabrics (NVMe-oF) changes the equation by extending the NVMe protocol across the network, giving hosts direct, parallel access to shared flash with latency close to that of locally attached NVMe drives, and doing so not just in the lab but at scale.
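To make "direct, parallel access" concrete: NVMe devices expose many deep submission queues, so throughput comes from keeping many I/Os in flight at once rather than issuing one read at a time. The sketch below approximates that access pattern from user space with Python threads and `os.pread` (Unix only) against an ordinary file. The chunk size and worker count are assumptions, and it does not configure NVMe-oF itself, which is done with host tooling such as nvme-cli.

```python
import os
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 1 << 20  # 1 MiB per request (assumed; tune for your device)


def read_chunk(fd: int, offset: int, size: int) -> bytes:
    # os.pread reads at an explicit offset without moving a shared file
    # position, so many threads can safely read the same descriptor at once.
    return os.pread(fd, size, offset)


def parallel_read(path: str, workers: int = 8, chunk_size: int = CHUNK_SIZE) -> bytes:
    """Read a whole file by keeping up to `workers` chunked reads in flight."""
    size = os.path.getsize(path)
    offsets = range(0, size, chunk_size)
    fd = os.open(path, os.O_RDONLY)
    try:
        with ThreadPoolExecutor(max_workers=workers) as pool:
            # pool.map yields results in input order, so chunks reassemble correctly.
            chunks = pool.map(lambda off: read_chunk(fd, off, chunk_size), offsets)
            return b"".join(chunks)
    finally:
        os.close(fd)
```

On a single local disk with a warm page cache the speedup may be small; the pattern pays off when the storage behind the file can actually service many requests concurrently.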

IDC’s latest numbers show NVMe-oF adoption surging at a 32% CAGR, with reported IOPS up to 8x that of traditional arrays.

End users report up to 10x faster access and 30–50% lower latency, not only in vendor demos but in real-world pipelines.
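One way to sanity-check IOPS and latency figures like these is Little's law: sustained IOPS equals the number of outstanding I/Os (queue depth) divided by average latency. The sketch below applies it. The queue depths and latencies are illustrative assumptions, not measurements from IDC or any vendor.

```python
def iops_from_littles_law(queue_depth: int, latency_us: float) -> float:
    """Little's law: throughput = concurrency / latency.

    queue_depth: average number of I/Os kept in flight.
    latency_us:  average per-I/O latency in microseconds.
    Returns sustained I/O operations per second.
    """
    if queue_depth <= 0 or latency_us <= 0:
        raise ValueError("queue depth and latency must be positive")
    return queue_depth * 1_000_000 / latency_us


def bandwidth_gbps(iops: float, io_size_kib: int) -> float:
    """Convert IOPS at a fixed I/O size into GB/s (decimal gigabytes)."""
    return iops * io_size_kib * 1024 / 1e9


# Illustrative numbers only (assumed, not measured):
legacy = iops_from_littles_law(queue_depth=32, latency_us=500)          # 64,000 IOPS
lower_latency = iops_from_littles_law(queue_depth=32, latency_us=100)   # 320,000 IOPS
deeper_queues = iops_from_littles_law(queue_depth=256, latency_us=100)  # 2,560,000 IOPS
print(f"{bandwidth_gbps(lower_latency, 4):.2f} GB/s at 4 KiB")          # 1.31 GB/s at 4 KiB
```

The takeaway is that cutting latency raises throughput even at a fixed queue depth, and NVMe's support for many deep queues lets software multiply that further, which is why both halves of the NVMe-oF pitch matter.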

Companies like VAST Data and Pure Storage are setting the pace, delivering architectures that can stream data to AI clusters fast enough to keep every GPU busy: no more idle silicon, no more frustrated data engineers.
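"Keeping every GPU busy" translates into a concrete bandwidth target: GPUs × samples consumed per second per GPU × bytes per sample, plus some headroom. The sketch below does that arithmetic for a hypothetical job; the GPU count, per-GPU rate, sample size, and 20% headroom are all assumptions to replace with your own measurements.

```python
from dataclasses import dataclass


@dataclass
class TrainingJob:
    gpus: int
    samples_per_sec_per_gpu: float  # measured compute throughput per GPU
    avg_sample_bytes: int           # average on-disk size of one training sample

    def required_read_gbps(self, headroom: float = 1.2) -> float:
        """Storage read bandwidth (GB/s) needed so GPUs never wait on data.

        headroom (assumed 20%) covers stragglers, epoch-start bursts and retries.
        """
        bytes_per_sec = self.gpus * self.samples_per_sec_per_gpu * self.avg_sample_bytes
        return bytes_per_sec * headroom / 1e9


# Hypothetical job: 64 GPUs, each consuming 2,000 images/s of about 150 KB.
job = TrainingJob(gpus=64, samples_per_sec_per_gpu=2000, avg_sample_bytes=150_000)
print(f"{job.required_read_gbps():.1f} GB/s")  # 23.0 GB/s
```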

How scale-out object storage powers AI at scale

Enterprises are also embracing scale-out object storage, not just for backup and archiving, but as a first-class citizen for AI and analytics.

Why? Object stores provide multi-petabyte scalability, S3 compatibility, and seamless integration with modern AI frameworks. Forrester’s recent survey found that 60% of organizations refreshing their storage in 2024 list “AI-readiness” as a top-three driver.

It’s not just about capacity.

Modern object storage supports hot/cold tiering, automates lifecycle management, and can serve up training datasets to hundreds of distributed workers at once.
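As an example of automated lifecycle management, the sketch below builds an S3-style lifecycle rule that moves objects under a prefix to colder storage classes as they age and eventually expires them. The prefix, rule ID, day thresholds, and storage classes are assumptions for illustration; the ordering checks are this sketch's own, not a statement of every service's validation rules.

```python
import json
from typing import List, Optional, Tuple


def build_lifecycle_rule(rule_id: str, prefix: str,
                         transitions: List[Tuple[int, str]],
                         expire_after_days: Optional[int] = None) -> dict:
    """Build one S3-style lifecycle rule for objects under `prefix`."""
    days = [d for d, _ in transitions]
    if days != sorted(days) or len(set(days)) != len(days):
        raise ValueError("transition days must be strictly increasing")
    rule = {"ID": rule_id, "Filter": {"Prefix": prefix}, "Status": "Enabled"}
    if transitions:
        rule["Transitions"] = [{"Days": d, "StorageClass": sc} for d, sc in transitions]
    if expire_after_days is not None:
        if days and expire_after_days <= days[-1]:
            raise ValueError("expiration must come after the last transition")
        rule["Expiration"] = {"Days": expire_after_days}
    return rule


# Hypothetical policy: raw training data cools after 30 days, is archived at 90,
# and is deleted after two years.
lifecycle = {"Rules": [build_lifecycle_rule(
    "cool-raw-training-data", "datasets/raw/",
    [(30, "STANDARD_IA"), (90, "GLACIER")],
    expire_after_days=730)]}
print(json.dumps(lifecycle, indent=2))
```

With boto3, a dict shaped like this is what `s3.put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=lifecycle)` expects. S3-compatible on-prem stores differ in which storage classes and rule fields they honor, so check the vendor's documentation.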

In practice, this means teams can scale experiments without hitting arbitrary limits—and without blowing the budget on over-provisioned flash.
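Serving one dataset to many distributed workers usually comes down to deciding which objects each worker reads. A minimal sketch, assuming hypothetical shard keys and a worker "rank" from 0 to world_size - 1: every worker shuffles the same sorted key list with the same seed and epoch, then takes every world_size-th key starting at its own rank. No coordination service is needed.

```python
import random
from typing import List


def shard_keys(keys: List[str], rank: int, world_size: int,
               epoch: int, seed: int = 0) -> List[str]:
    """Return the object keys worker `rank` should read for one epoch."""
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    ordered = sorted(keys)                         # identical starting order on every worker
    random.Random(seed + epoch).shuffle(ordered)   # identical shuffle per epoch
    return ordered[rank::world_size]               # disjoint, strided slice per worker


# Hypothetical dataset of 10 shard objects split across 4 workers.
keys = [f"datasets/train/shard-{i:05d}.tar" for i in range(10)]
for rank in range(4):
    print(rank, shard_keys(keys, rank, world_size=4, epoch=0))
```

When the key count is not a multiple of the worker count, some workers get one fewer key; real pipelines typically pad or drop the remainder to keep steps in lockstep.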

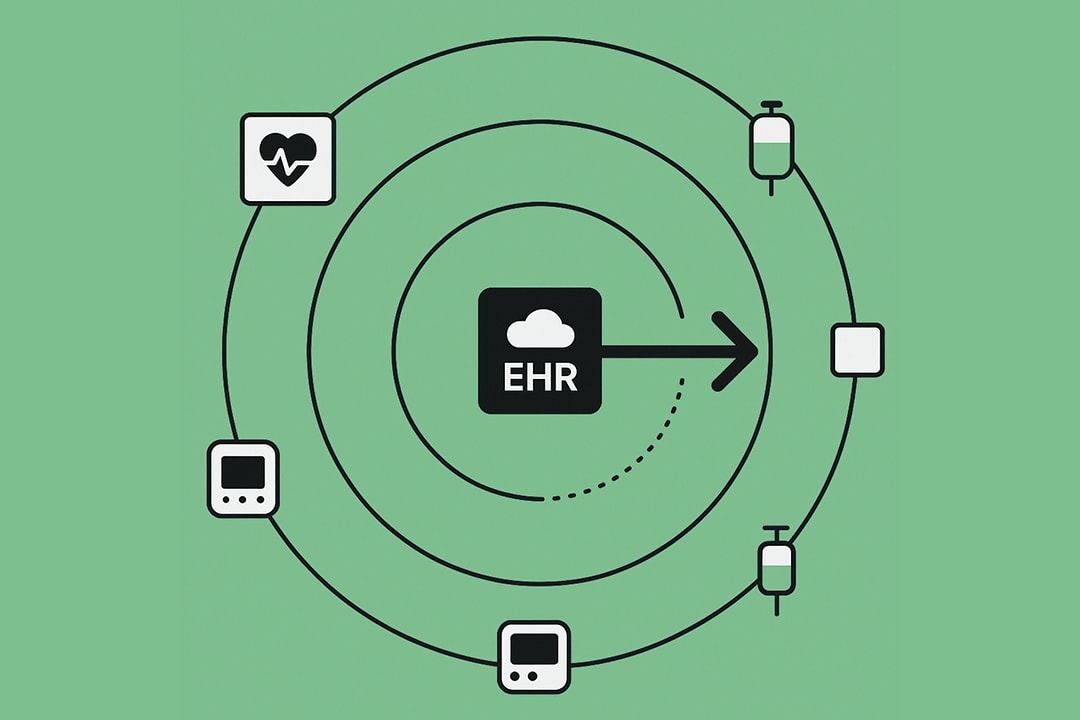

How edge-to-cloud data fabrics are closing the analytics gap

But storage alone isn’t enough. The real unlock comes from edge-to-cloud data fabrics, platforms that automate data placement, movement, and real-time ETL across edge, on-prem, and cloud.

Instead of shoveling all data to a central lake, these fabrics let organizations analyze what matters where it matters, slashing latency and cloud egress bills.
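"Analyze what matters where it matters" often means summarizing at the edge and shipping only summaries and exceptions upstream. The sketch below reduces a window of raw sensor readings to a compact record. The sampling rate, window length, alert threshold, and 8-byte-per-reading size are assumptions for illustration.

```python
import json
import statistics
from typing import Dict, List


def summarize_window(readings: List[float], alert_threshold: float) -> Dict[str, object]:
    """Reduce one window of raw readings to a compact summary.

    Only this summary (including readings above alert_threshold) would be
    sent upstream; the raw window stays at the edge.
    """
    return {
        "count": len(readings),
        "mean": statistics.fmean(readings),
        "max": max(readings),
        "alerts": [r for r in readings if r > alert_threshold],
    }


window = [20.0 + 0.01 * (i % 100) for i in range(60_000)]  # 60 s at 1 kHz (simulated)
window[12_345] = 95.0                                       # one anomalous spike
summary = summarize_window(window, alert_threshold=80.0)
raw_bytes = len(window) * 8                                 # assume 8-byte floats on the wire
sent_bytes = len(json.dumps(summary).encode())
print(summary["alerts"], f"{raw_bytes / sent_bytes:.0f}x less data sent")
```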

The numbers are compelling: MIT Sloan reports 5–10x faster analytics turnaround and 40–60% less manual engineering when data fabrics are in play.

Leading platforms use policy-driven automation to keep hot data close to compute, move cold data to low-cost tiers, and stitch everything together with a unified metadata layer.
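A policy like "keep hot data close to compute, move cold data to low-cost tiers" can be expressed as a small function over the access statistics a metadata layer tracks. In the sketch below the tier names, thresholds, and dataset entries are all hypothetical; real fabrics expose their own policy languages.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class DatasetStats:
    name: str
    last_access: datetime
    reads_last_7d: int


def choose_tier(stats: DatasetStats, now: datetime,
                hot_reads: int = 50, warm_days: int = 30) -> str:
    """Hypothetical placement policy:
    hot  -> read often this week: keep on NVMe next to compute
    warm -> touched within warm_days: capacity object storage
    cold -> everything else: archive tier
    """
    if stats.reads_last_7d >= hot_reads:
        return "hot"
    if now - stats.last_access <= timedelta(days=warm_days):
        return "warm"
    return "cold"


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
catalog = [
    DatasetStats("line-3-vibration", now - timedelta(hours=2), 400),
    DatasetStats("q1-ct-scans", now - timedelta(days=12), 3),
    DatasetStats("2022-pos-logs", now - timedelta(days=400), 0),
]
placement = {d.name: choose_tier(d, now) for d in catalog}
print(placement)
```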

For manufacturing and healthcare, this means real-time insights at the edge—without sacrificing the ability to train models or run deep analytics in the cloud.

What this means for leaders

Adopting AI-optimized storage and fabrics isn’t about jumping on the latest bandwagon; it’s about removing friction so AI and analytics teams can deliver.

The best IT shops are deploying NVMe-oF for raw speed, object storage for scale and flexibility, and data fabrics to orchestrate data flow across the enterprise.

This is what turns AI from a science project into a production engine, and what keeps IT in the driver’s seat, not stuck in the slow lane.

What IT leaders should do now to future-proof their data and storage

How to build a roadmap for next-gen storage and data fabrics

Future-proofing starts with ruthless self-assessment. Map out which workloads are already pushing the limits: AI pipelines, real-time analytics, edge data streams.

Identify where bottlenecks are holding up business outcomes, not just technical metrics. The smart move is to pilot NVMe-over-Fabrics or scale-out object storage on a high-value use case, then scale based on measurable wins: throughput, latency, time-to-insight, cost per gigabyte served.
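To keep a pilot honest, compare it against the baseline on the same handful of metrics named above. The sketch below computes improvement ratios, including cost per gigabyte served (monthly cost divided by data actually delivered). Every number is hypothetical, and "time to insight" stands for whatever end-to-end measure the business cares about.

```python
from dataclasses import dataclass


@dataclass
class PilotMetrics:
    throughput_gbps: float      # sustained read throughput
    p99_latency_ms: float       # tail latency seen by the workload
    time_to_insight_h: float    # e.g. hours from data landing to report or model ready
    monthly_cost_usd: float     # all-in cost of the storage under test
    gb_served_per_month: float

    @property
    def cost_per_gb_served(self) -> float:
        return self.monthly_cost_usd / self.gb_served_per_month


def compare(baseline: PilotMetrics, pilot: PilotMetrics) -> dict:
    """Ratios above 1.0 mean the pilot is better on that metric."""
    return {
        "throughput_gain": pilot.throughput_gbps / baseline.throughput_gbps,
        "latency_gain": baseline.p99_latency_ms / pilot.p99_latency_ms,
        "time_to_insight_gain": baseline.time_to_insight_h / pilot.time_to_insight_h,
        "cost_per_gb_gain": baseline.cost_per_gb_served / pilot.cost_per_gb_served,
    }


# Hypothetical numbers for illustration only.
baseline = PilotMetrics(throughput_gbps=2.0, p99_latency_ms=40.0, time_to_insight_h=12.0,
                        monthly_cost_usd=20_000, gb_served_per_month=400_000)
pilot = PilotMetrics(throughput_gbps=7.0, p99_latency_ms=10.0, time_to_insight_h=2.0,
                     monthly_cost_usd=30_000, gb_served_per_month=1_500_000)
for metric, ratio in compare(baseline, pilot).items():
    print(f"{metric}: {ratio:.1f}x")
```

Note that the pilot costs more per month here yet wins on cost per gigabyte served, which is exactly the kind of result a pure budget comparison would hide.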

Don’t fly blind. Benchmark and validate architecture choices against established industry specifications and test methodologies, such as those published by SNIA and the Open Compute Project.
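Whatever methodology you follow, report tail latency, not just averages: a pipeline feeding GPUs stalls on its slowest reads. The sketch below summarizes latency samples with nearest-rank percentiles. It does not implement any SNIA or OCP specification, and the sample data is synthetic.

```python
import math
from typing import Dict, List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[rank - 1]


def latency_report(samples_ms: List[float]) -> Dict[str, float]:
    """Median plus tail percentiles, keyed as p50, p99, p99.9."""
    return {f"p{p}": percentile(samples_ms, p) for p in (50, 99, 99.9)}


# Synthetic samples: mostly fast reads, a few slow ones, two very slow outliers.
samples = [1.0] * 980 + [5.0] * 18 + [50.0] * 2
print(latency_report(samples))
```

In this synthetic case the median looks excellent while p99.9 is fifty times worse, which is the gap averages tend to hide.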

Governance needs to keep pace: align your roadmap with regulatory shifts—GDPR, HIPAA, SBOM requirements—and AI governance policies. Build in regular check-ins to adapt to new mandates or business pivots.

Why ignoring legacy modernization is a strategic risk

Technical debt is more than an IT headache—it’s a business liability.

Legacy storage and data pipelines increase breach risk, slow down AI adoption, and drag down agility.

The cost isn’t just in dollars—it’s in lost opportunity and credibility. Organizations that keep deferring modernization end up with brittle “Frankenstacks” that no one wants to own.

The solution is phased but deliberate: start by wrapping legacy systems with API gateways, then offload or refactor to microservices and cloud-native platforms.
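Wrapping a legacy system and then peeling capabilities off one at a time is often called the "strangler fig" pattern. The sketch below shows the idea in-process: one facade that initially routes every request to the legacy handler and is switched route by route to new services, so callers never change. In practice this routing lives in the API gateway's configuration; the class, route names, and handlers here are hypothetical.

```python
from typing import Callable, Dict

Handler = Callable[[str, dict], dict]


class StranglerFacade:
    """Single entry point in front of a legacy system."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, route: str, new_handler: Handler) -> None:
        # Switch one route to the new service; all other routes stay on legacy.
        self._migrated[route] = new_handler

    def handle(self, route: str, request: dict) -> dict:
        handler = self._migrated.get(route, self._legacy)
        return handler(route, request)


def legacy_nas(route: str, request: dict) -> dict:
    return {"served_by": "legacy", "route": route}


def object_api(route: str, request: dict) -> dict:
    return {"served_by": "object-store", "route": route}


facade = StranglerFacade(legacy_nas)
facade.migrate("/datasets/read", object_api)
print(facade.handle("/datasets/read", {}))   # now served by the new service
print(facade.handle("/reports/export", {}))  # still served by legacy
```

Because each route flips independently, the most painful, high-impact paths can move first and a bad migration can be rolled back by pointing a single route back at legacy.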

Don’t try to boil the ocean; tackle the most painful, high-impact systems first.

The goal is a storage and data foundation that can flex with new workloads and business demands, not one that requires heroics to maintain.

What the future holds for cloud, edge, and AI storage

The next wave is already forming: exabyte-scale object stores, federated learning architectures, and confidential computing for data-in-use privacy.

Post-quantum cryptography is moving from theory to pilot in some regulated sectors. IT leaders who invest now in flexible, modular architectures will have the edge—literally and figuratively—when the next disruption hits.

The cultural shift matters as much as the tech. Upskilling is non-negotiable: “AI fluency” has to reach storage admins, data engineers, and—yes—the business side.

Cross-functional teams are the norm; silos are a liability.

How to lead change and get buy-in from stakeholders

Change doesn’t happen by decree.

The best leaders translate technical wins into business impact—shorter time to market, new revenue, risk reduction.

Use stories, not just stats. Show how a storage upgrade enabled faster product launches or improved patient outcomes.

Phased rollouts build trust: land a few quick wins, then expand. Involve stakeholders early and often. Make the case for why modernization matters—not as a technical upgrade, but as a strategic enabler for everything the business wants to do next.

In this landscape, waiting is the only sure way to fall behind. The future is being built on fast, flexible, AI-ready data platforms—IT leaders who act now will own it.

FAQ

What is NVMe-over-Fabrics and why is it important for AI workloads?

NVMe-over-Fabrics (NVMe-oF) is a protocol specification that extends NVMe beyond a server's PCIe bus to network fabrics such as Ethernet (using RDMA or TCP), InfiniBand, and Fibre Channel, giving hosts direct, parallel access to remote flash with latency close to that of local NVMe drives. It's critical for AI workloads because it can deliver up to 10x faster data access and 30-50% lower latency than traditional storage area networks (SANs). This means AI models can train faster, GPUs stay busy rather than waiting for data, and inference pipelines run more efficiently.

How do edge-to-cloud data fabrics reduce costs and improve performance?

Edge-to-cloud data fabrics automate the movement of data between edge devices, on-premises systems, and cloud platforms based on policy. They reduce costs by keeping hot data local (avoiding expensive cloud egress fees) while automatically tiering cold data to lower-cost storage. Performance improves because analytics happens where the data is generated—MIT Sloan reports 5-10x faster analytics and 40-60% less manual engineering effort with these architectures.

What are the main differences between object storage and parallel file systems for AI?

Object storage excels at massive scale (exabytes), S3 compatibility, and long-term data management with features like versioning and lifecycle policies. It's ideal for large unstructured datasets. Parallel file systems deliver higher performance (up to 80 GB/s per node) with POSIX compatibility and are optimized for high-throughput, random access patterns common in AI training. Many organizations use both: object storage for data lakes and archives, parallel file systems for active AI workloads.

How can IT leaders build a business case for modernizing legacy storage systems?

Build your business case around measurable outcomes: reduced time-to-insight for analytics, faster AI model training, lower total cost of ownership, and decreased security risk. Quantify the cost of delays and missed opportunities from storage bottlenecks. Start with a high-value pilot project to demonstrate ROI, then use those metrics to justify broader modernization. Frame the discussion around enabling business initiatives rather than technical upgrades.
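A simple payback calculation turns those outcomes into a number finance can discuss. The sketch below models monthly benefit as engineering time recovered plus the agreed value of faster delivery, minus any increase in run cost. The field names and figures are hypothetical assumptions; the value of faster delivery in particular should be an estimate agreed with the business, not an IT guess.

```python
import math
from dataclasses import dataclass


@dataclass
class ModernizationCase:
    upfront_cost: float                      # hardware, migration, training
    monthly_run_cost_delta: float            # new minus old run cost (negative = cheaper)
    engineer_hours_saved_per_month: float
    loaded_hourly_rate: float
    monthly_value_of_faster_delivery: float  # estimate agreed with the business

    def monthly_benefit(self) -> float:
        return (self.engineer_hours_saved_per_month * self.loaded_hourly_rate
                + self.monthly_value_of_faster_delivery
                - self.monthly_run_cost_delta)

    def payback_months(self) -> float:
        benefit = self.monthly_benefit()
        return math.inf if benefit <= 0 else self.upfront_cost / benefit

    def net_benefit(self, months: int) -> float:
        return self.monthly_benefit() * months - self.upfront_cost


case = ModernizationCase(upfront_cost=600_000, monthly_run_cost_delta=5_000,
                         engineer_hours_saved_per_month=400, loaded_hourly_rate=100,
                         monthly_value_of_faster_delivery=15_000)
print(f"payback: {case.payback_months():.1f} months; "
      f"3-year net: ${case.net_benefit(36):,.0f}")
```

Running the same model with pilot measurements in place of estimates is a natural way to tie the broader modernization ask back to observed results.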

What skills do IT teams need to implement AI-optimized storage and data fabrics?

Teams need a blend of traditional storage expertise and newer skills: containerization, infrastructure-as-code, API management, and data engineering fundamentals. Knowledge of AI/ML workflows helps teams optimize for those workloads. Cloud architecture skills are essential for hybrid deployments. Most importantly, IT professionals need to understand data governance, security, and compliance requirements as these architectures span multiple environments and jurisdictions.